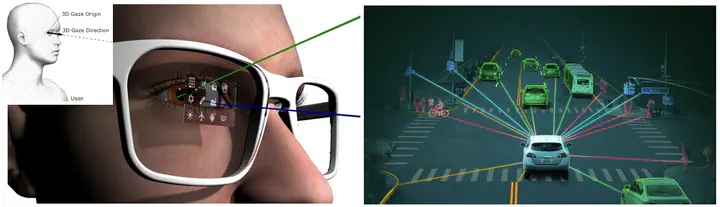

Application Research — AR/VR & Autonomous Driving, and more.

Abstract: My application-oriented research investigates how advances in computer vision and multi-modal learning can be deployed in real-world systems such as AR/VR and autonomous driving. In AR/VR, I focus on human-centric AI technologies including eye tracking, neural rendering, and spatial perception to enable immersive and adaptive user experiences. For autonomous driving, I study robust visual perception methods such as visual SLAM, object detection, and sensor fusion that combine camera, LiDAR, and other sensing modalities for safe and reliable navigation. By grounding algorithmic innovations in practical domains, my work bridges foundational research with high-impact applications that push the boundaries of intelligent systems.

Research Idea

Ex. Application Domain (AR/VR, Autonomous Driving)

In this line of research, we aim to translate advances in visual geometry and multi-modal learning into deployable systems for AR/VR and autonomous driving. Unlike offline benchmarks, these domains demand real-time latency, safety guarantees, on-device efficiency, and robustness to long-tail conditions (e.g., motion blur, low light, domain shift).

Our approach couples geometry-aware perception with language-grounded reasoning and multi-sensor fusion (e.g., camera, IMU, LiDAR, eye-tracking). We build representations that are equivariant/invariant to 3D transformations, estimate uncertainty for safety-critical decision making, and leverage post-training (SFT, preference/RL) to align models with task constraints and human factors.

Relavant Website (But, not limited)

- Gaze 2024 workshop in CVPR : https://gazeworkshop.github.io/2024/

- Image Matching 2025 workshop in CVPR : https://image-matching-workshop.github.io/

- Visual SLAM with COLMAP: https://colmap.github.io/

Ex. Document Understanding and Information Extraction (Extension of LG AI Resaerch)

Another application focus of my research is document understanding and information extraction, where vision–language models are extended to text-rich and visually-structured data such as PDFs, forms, scientific papers, and multi-page reports. Unlike conventional AR/VR or autonomous driving, this domain requires models to jointly reason over textual content, visual layout, and cross-page context. Recent works at CVPR 2025 highlight several promising directions:

LLM-based understanding (DocQA)

- DocLayLLM: An Efficient Multi-modal Extension of Large Language Models for Text-rich Document Understanding

- Marten: Visual Question Answering with Mask Generation for Multi-modal Document Understanding

- Docopilot: Improving Multimodal Models for Document-Level Understanding

- A Simple yet Effective Layout Token in Large Language Models for Document Understanding

Multi-modal retrieval

- Recurrence-Enhanced Vision-and-Language Transformers for Robust Multimodal Document Retrieval

- VDocRAG: Retrieval-Augmented Generation over Visually-Rich Documents

- DrVideo: Document Retrieval Based Long Video Understanding

- Document Haystacks: Vision-Language Reasoning Over Piles of 1000+ Documents

Document Parsing (Document Layout Analysis)

- OmniDocBench: Benchmarking Diverse PDF Document Parsing with Comprehensive Annotations

- DocSAM: Unified Document Image Segmentation via Query Decomposition and Heterogeneous Mixed Learning

Robustness in Documnent Layout Analysis

- Robustness in DLA

- RoDLA- Benchmarking the Robustness of Document Layout Analysis Models (CVPR 2024)

- DocRes- A Generalist Model Toward Unifying Document Image Restoration Tasks

- Geometric Representation Learning for Document Image Rectification